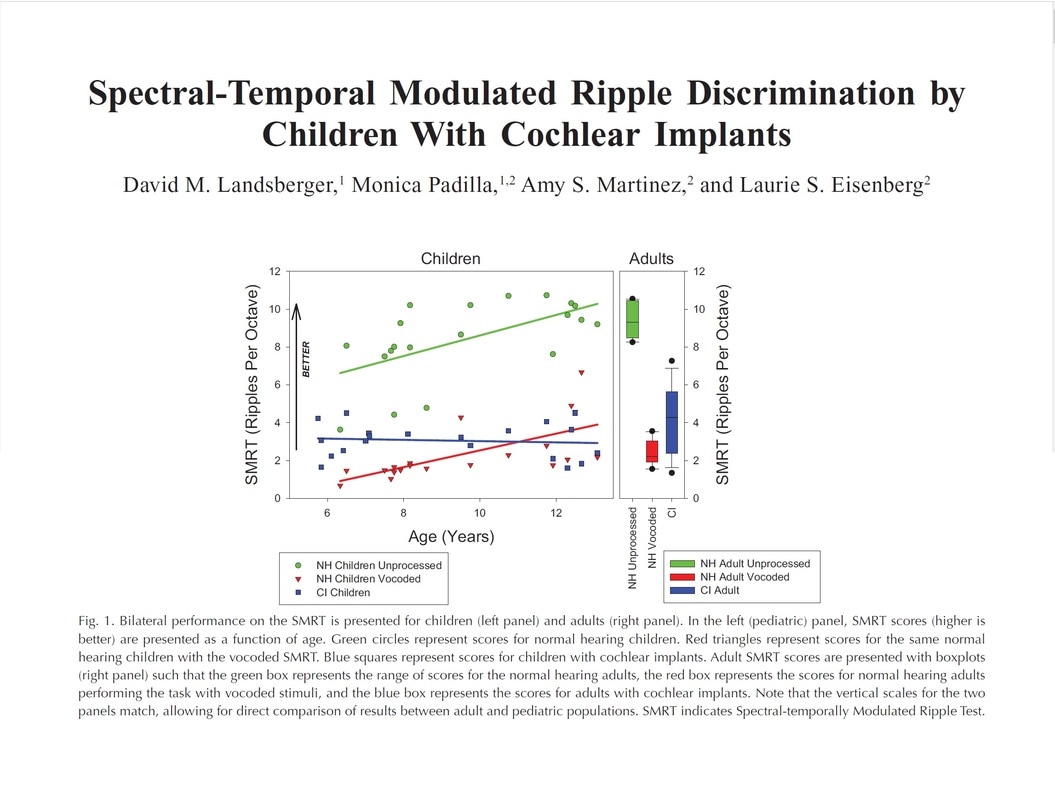

New Paper: Spectral-Temporal Modulated Ripple Discrimination by Children With Cochlear Implants

7/7/2017

This new paper shows that spectral resolution improves with age for children with normal hearing (green dots/line), but not for children with cochlear implants (blue dots/line). Furthermore, adults with cochlear implants (who lost their hearing after developing language; blue box plot) have better spectral resolution than children with cochlear implants. Because children with cochlear implants can nevertheless perform very well, the data suggest that children and adults with cochlear implants may weight cues differently. If so, implanted children may benefit from different cochlear implant processing strategies than adults.

This paper is the first pediatric paper out of the EAR Lab, as well as the first from our collaboration with Laurie Eisenberg and Amy Martinez at the University of Southern California.

Landsberger, D. M., Padilla, M., Martinez, A. S., and Eisenberg, L. S. (2017). "Spectral-Temporal Modulated Ripple Discrimination by Children With Cochlear Implants," Ear Hear.


A brand new version of the SMRT software (1.1.3) has been released. The SMRT is a measure of spectral resolution that correlates with speech measures (Holden et al., 2016; Zhou, 2017). It is available to download for free and is easy to use. Details on the test can be found in Aronoff and Landsberger (2013). New features of SMRT 1.1.3:

This evening, David Landsberger spoke to the New York City Chapter of the Hearing Loss Association of America. He spoke about "Cochlear Implants: Where we started and where we are going." The best part of his talk was about Rod Saunders, who was the first person to receive a prototype of the Nucleus cochlear implant in 1977 and is a personal hero for the lab.

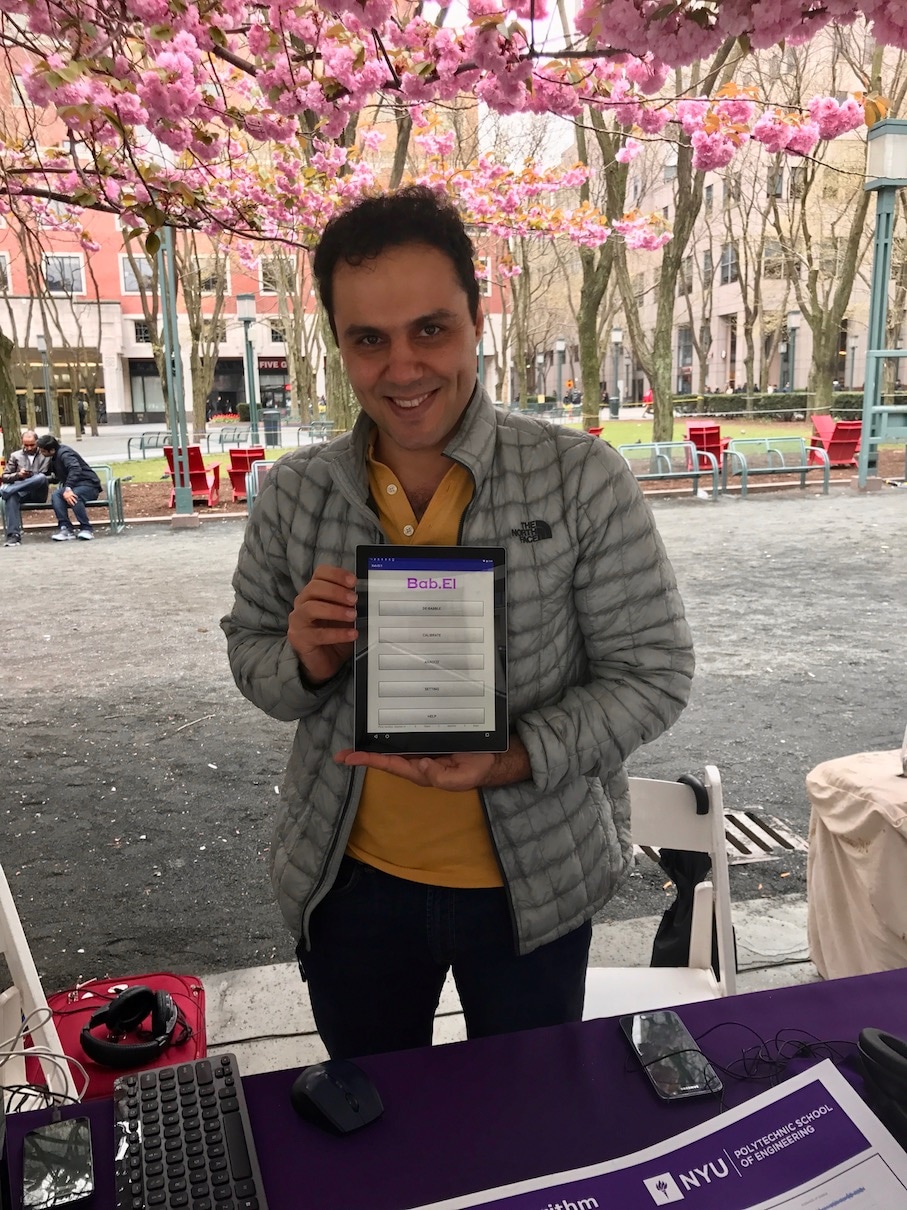

For those of you who don't know, the HLAA is a fantastic organization that we highly recommend to anyone with hearing loss (or an interest in hearing loss). Thanks to Rick Savadow for the photo!

Congratulations to Roozbeh Soleymani! He won second place at the NYU Tandon Research Expo for his development of the SEDA noise reduction algorithm and its implementation in the Bab.el software.

The text below (and the photo above) is taken from an article about the event by technical.ly:

Roozbeh Soleymani, a doctoral student in electrical engineering, won second place for his app Bab.El, on which he collaborated with two NYU faculty members. The app, which is not yet available publicly, eliminates what’s known as babble noise: unintelligible background speech that can make it difficult to decipher the main conversation. That’s especially a challenge for people who have cochlear implants, devices that restore hearing to deaf individuals. Bab.El works in real time to reduce interfering babble noise on phone calls, as Soleymani demonstrated using a noise generator.

Hiro of Columbia University came to his old stomping ground today to check out EAR Lab and give a talk. His talk focused on the delivery of drugs to the inner ear via round window perforations made with arrays of tiny needles. Among other things, we learned that guinea pig round windows are shaped like Pringles.

Here is a new video explaining our new stimulation mode, the Virtual Tripole (in blue), from our new paper (Padilla et al., 2017). The Virtual Tripole (or VTP) is a current-focused virtual channel that can increase the number of stimulation sites beyond the number of physical electrodes (like the Monopolar Virtual Channels used in Fidelity 120) and hopefully also reduce the spread of excitation (like a tripole). The text from the video is as follows:

In this video, we illustrate the Virtual Tripole mode introduced in Padilla et al. (2017) and compare it to typical virtual channels (i.e., Monopolar Virtual Channels). Both the Virtual Tripole and the Monopolar Virtual Channel are current-steered stimulation modes used to provide more sites of stimulation than there are physical electrodes.

In the top right panel, you can see the modeled spread of excitation from a Monopolar Virtual Channel (in black) and a Virtual Tripole (in blue) as the peak of stimulation shifts across the cochlea. Both modes successfully provide a continuum of stimulation peaks across the array. However, like a traditional tripole, the Virtual Tripole should provide a narrower spread of excitation. Hopefully, when implemented in a sound coding strategy, this will limit channel interaction and improve the perception of spectral information.

The bottom two panels on the right side illustrate how the stimulation modes are generated. Each red point indicates an electrode, and each arrow indicates the amplitude (and phase) of stimulation at that location. The black arrows illustrate current steering using a traditional Monopolar Virtual Channel. The blue arrows illustrate current steering using the Virtual Tripole. As can be seen, the Virtual Tripole is effectively a traditional tripole generated by three virtual channels instead of three physical electrodes. On the left, a Monopolar Virtual Channel is compared to Quadrupolar Virtual Channels.
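To make the "tripole built from three virtual channels" idea concrete, here is a minimal Python sketch of per-electrode current weights for a Monopolar Virtual Channel and for a Virtual Tripole assembled from three of them. It rests on assumptions that are ours rather than details taken from Padilla et al. (2017): linear current steering between adjacent electrodes, flanking virtual channels exactly one electrode away from the center, and a hypothetical focusing coefficient `sigma` that gives each flank `sigma / 2` of the center amplitude in the opposite phase. The function names are hypothetical, and the weight centroid is only a crude stand-in for the excitation model (Litvak et al., 2007) used in the animation.

```python
import math


def mpvc_weights(position, n_electrodes):
    """Monopolar virtual channel: split unit current between the two
    electrodes that bracket `position` (linear current steering assumed)."""
    if not 0 <= position <= n_electrodes - 1:
        raise ValueError("position must lie on the electrode array")
    k = min(math.floor(position), n_electrodes - 2)  # lower electrode of the pair
    alpha = position - k                              # steering coefficient, 0..1
    weights = [0.0] * n_electrodes
    weights[k] = 1.0 - alpha
    weights[k + 1] = alpha
    return weights


def vtp_weights(position, n_electrodes, sigma=0.5):
    """Virtual tripole (assumed form): an in-phase MPVC at `position` plus two
    out-of-phase MPVC flanks one electrode away, each scaled by sigma / 2.
    `position` must be at least one electrode away from either end."""
    center = mpvc_weights(position, n_electrodes)
    apical = mpvc_weights(position - 1, n_electrodes)
    basal = mpvc_weights(position + 1, n_electrodes)
    return [c - sigma / 2 * (a + b) for c, a, b in zip(center, apical, basal)]


def weight_centroid(weights):
    """Current-weighted electrode position (undefined if net current is zero)."""
    total = sum(weights)
    if math.isclose(total, 0.0, abs_tol=1e-12):
        raise ValueError("net current is zero; centroid undefined")
    return sum(i * w for i, w in enumerate(weights)) / total


if __name__ == "__main__":
    # Steer a hypothetical VTP across electrodes 4..5 on a 16-electrode array.
    for p in (4.0, 4.25, 4.5, 4.75, 5.0):
        w = vtp_weights(p, n_electrodes=16, sigma=0.5)
        active = {i: round(x, 4) for i, x in enumerate(w) if x != 0}
        print(p, active, round(weight_centroid(w), 4))
```

Because the center virtual channel and both flanks are steered together, the centroid of the weights in this sketch equals the steered position wherever it lies on the array, which is at least consistent with the symmetric behavior described for the Virtual Tripole. With `sigma = 1` the flanks cancel the net current entirely (a full tripole), so the centroid helper refuses to compute it.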
Quadrupolar Virtual Channels, first introduced in Landsberger and Srinivasan (2009), were an early attempt to create a current-focused virtual channel. The Monopolar Virtual Channel is shown in black, while the Quadrupolar Virtual Channel is shown in magenta. Unlike the Virtual Tripole, the Quadrupolar Virtual Channel uses out-of-phase stimulation at fixed locations for current focusing. As a result, the distribution moves asymmetrically as it is steered across the array. Perhaps even more troublesome, when stimulation shifts from one electrode pair to the next, the shape of the distribution jumps, which can cause pitch reversals. For further details on the Virtual Tripole stimulation mode, consult Padilla et al. (2017). Thank you to Leo Litvak for providing his model from Litvak et al. (2007), which was used to generate this animation, and to Chi-young Kim for writing the earliest version of the code used to generate these animations.

EARLab is proud to announce the publication of a new paper:

Padilla, M., Stupak, N., and Landsberger, D. M. (in press). "Pitch Ranking with Different Virtual Channel Configurations in Electrical Hearing," Hearing Research.

We introduce a new stimulation mode, the virtual tripole (VTP). The VTP is a tripole created from virtual channels to simultaneously provide current steering and current focusing. Hopefully, using VTPs in a sound coding strategy will provide the benefits of virtual channels (e.g., Fidelity 120) together with the benefits of tripolar stimulation (e.g., Srinivasan et al., 2013). In this paper, we document that previous attempts at current-focused virtual channels (e.g., the Quadrupolar Virtual Channel; Landsberger and Srinivasan, 2009) can produce an asymmetric distribution along the cochlea, which can lead to pitch reversals and deviations from a correct tonotopic map. In contrast, the VTP is specifically designed to provide a symmetrical distribution and therefore successfully maintains tonotopic organization. We conclude that the VTP is likely to be a good choice of stimulation mode for a strategy providing both current steering and current focusing. Please take a read of the paper!

EARLab is proud to be a part of a new paper with the Medical University Hannover and Advanced Bionics!

Nogueira, W., Litvak, L., Landsberger, D. M., and Buchner, A. (2016). "Loudness and Pitch Perception using Dynamically Compensated Virtual Channels," Hear Res. Epub ahead of print.

Previous work has shown that stimulating two electrodes simultaneously can create what is effectively an electrode between the two physical electrodes (i.e., a "Virtual Channel" or MPVC). This is the trick Advanced Bionics uses to create 120 channels with 16 electrodes in Fidelity 120. Current focusing (like tripolar stimulation) does not increase the number of channels, but it can make the stimulation from each electrode more "accurate" (i.e., produce a reduced spread of neural excitation), as shown in Landsberger et al. (2012), and can produce better speech understanding in noise, as shown in Srinivasan et al. (2013). In our lab, we have previously combined the virtual channel concept with current focusing using a stimulation mode called the Quadrupolar Virtual Channel (e.g., Landsberger and Srinivasan, 2009). While current focusing is quite promising, it is also power-inefficient and can drain a battery quickly. In the current paper, a new stimulation mode (the Dynamically Compensated Virtual Channel, or DC-VC) is presented, which is designed to provide current steering (like a virtual channel) and current focusing (like tripolar stimulation) while being more power-efficient. The trick is to "dynamically" control the amount of current focusing depending on the amplitude/loudness of the DC-VC. Please take a read of the paper!

A video from Oticon Medical for the Music and CI Symposium has found its way online. This was truly a remarkable event dedicated exclusively to music perception with cochlear implants. EAR Lab members Ann Todd and David Landsberger were in attendance. You can spot both of them (as well as many other leaders in the field) in the video. David was even interviewed twice.

Thanksgiving week started on the right foot with a visit from Alan Kan and Tanvi Thakker from the University of Wisconsin. Alan gave a talk at the NYU Cochlear Implant Center and then Tanvi gave a talk to EAR Lab as well as Mario Svirsky's lab. As can be expected, the talks evolved into long and productive discussions.


EAR Lab

This is where we provide updates from the lab.

Archives

December 2018
